45 machine learning noisy labels

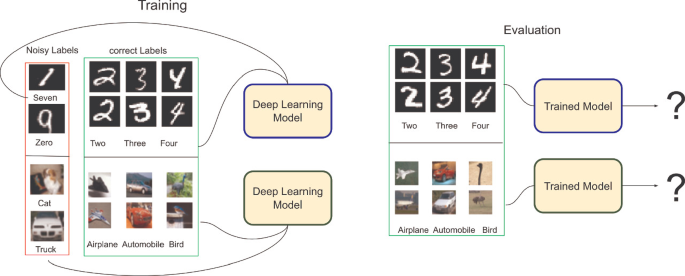

Learning from Noisy Labels with Deep Neural Networks - arXiv by H Song · 2020 · Cited by 277 - As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important ...

Deep learning with noisy labels: Exploring techniques and remedies in ... There is a growing interest in obtaining such datasets for medical image analysis applications. However, the impact of label noise has not received sufficient attention. Recent studies have shown that label noise can significantly impact the performance of deep learning models in many machine learning and computer vision applications.

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

Machine learning noisy labels

Event-Driven Architecture Can Clean Up Your Noisy Machine Learning Labels Machine learning requires a data input to make decisions. When talking about supervised machine learning, one of the most important elements of that data is its labels. In Riskified's case, the ...

[2012.03061] A Survey on Deep Learning with Noisy Labels by FR Cordeiro · 2020 · Cited by 25 - Abstract: Noisy Labels are commonly present in data sets automatically collected from the internet, mislabeled by non-specialist annotators, ...

Machine Learning Glossary | Google Developers Oct 14, 2022 · The term "convolution" in machine learning is often a shorthand way of referring to either convolutional operation or convolutional layer. Without convolutions, a machine learning algorithm would have to learn a separate weight for every cell in a large tensor. For example, a machine learning algorithm training on 2K x 2K images would be forced ...

QActor: Active Learning on Noisy Labels - PMLR Conference paper by Taraneh Younesian, Zilong Zhao, Amirmasoud Ghiassi, Robert Birke, and Lydia Y Chen. In Proceedings of The 13th Asian Conference on Machine Learning (Proceedings of Machine Learning Research, PMLR, pmlr-v157-younesian21a), 2021, edited by Vineeth N. Balasubramanian and Ivor Tsang, pp. 548-563.

Learning with Noisy Labels - NIPS papers The theoretical machine learning community has also investigated the problem of learning from noisy labels. Soon after the introduction of the noise-free PAC model, Angluin and Laird [1988] proposed the random classification noise (RCN) model where each label is flipped independently with some probability ρ ∈ [0, 1/2).

Top 20 Dataset in Machine Learning | ML Dataset | Great Learning Sep 06, 2022 · A dataset is the basis and first step in building a machine learning application. Datasets are available in different formats like .txt, .csv, and many more. For supervised machine learning, a labelled training dataset is used, as the labels act as a supervisor for the model.

How Noisy Labels Impact Machine Learning Models - KDnuggets While this study demonstrates that ML systems have a basic ability to handle mislabeling, many practical applications of ML are faced with complications that make label noise more of a problem. These complications include not being able to create very large training sets, and systematic labeling errors that confuse machine learning.
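The random classification noise (RCN) model quoted above can be simulated in a few lines. The following is only a minimal sketch for binary labels: the function name `flip_labels`, the `seed` parameter, and the toy data are illustrative assumptions, not taken from any of the cited papers.

```python
import random

def flip_labels(labels, rho, seed=0):
    """Flip each binary label (0 or 1) independently with probability rho."""
    # The RCN model restricts the flip probability to [0, 1/2).
    if not 0.0 <= rho < 0.5:
        raise ValueError("RCN assumes rho in [0, 1/2)")
    rng = random.Random(seed)  # hypothetical fixed seed, for repeatability only
    return [1 - y if rng.random() < rho else y for y in labels]

# Toy data (assumption): 10,000 clean binary labels.
clean = [0, 1] * 5000
noisy = flip_labels(clean, rho=0.2)

# The observed fraction of flipped labels should be close to rho.
observed_rate = sum(c != n for c, n in zip(clean, noisy)) / len(clean)
print(f"observed flip rate: {observed_rate:.3f}")
```

Injecting noise at a known rate like this is a common way to build a controlled test set, because the clean labels remain available for comparison.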

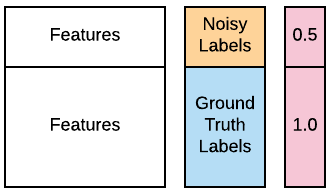

PDF Learning with Noisy Labels - Carnegie Mellon University The theoretical machine learning community has also investigated the problem of learning from noisy labels. Soon after the introduction of the noise-free PAC model, Angluin and Laird [1988] proposed the random classification noise (RCN) model where each label is flipped independently with some probability ρ ∈ [0, 1/2).

Data Noise and Label Noise in Machine Learning Asymmetric Label Noise, All Labels: Randomly chosen α% of all labels i are switched to label i + 1, or to 0 for maximum i (see Figure 3). This follows the real-world scenario that labels are randomly corrupted, as also the order of labels in datasets is random [6]. Figure 3 (own image): asymmetric label noise. Asymmetric Label Noise, Single Label

3.6. scikit-learn: machine learning in Python - Scipy lecture ... 3.6.2.2. Supervised Learning: Classification and regression. In Supervised Learning, we have a dataset consisting of both features and labels. The task is to construct an estimator which is able to predict the label of an object given the set of features.

machine learning - Classification with noisy labels ... - Cross Validated Let $p_t$ be a vector of class probabilities produced by the neural network and $\ell(y_t, p_t)$ be the cross-entropy loss for label $y_t$. To explicitly take into account the assumption that 30% of the labels are noise (assumed to be uniformly random), we could change our model to produce $\tilde{p}_t = 0.3/N + 0.7\,p_t$ instead and optimize
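Two of the snippets above describe concrete procedures: switching a random share of labels i to i + 1 (asymmetric noise), and mixing the model's probabilities with a uniform distribution when 30% of labels are assumed to be random. The sketch below illustrates both under stated assumptions: the function names are hypothetical, `alpha` is given as a fraction rather than a percentage, and applying cross-entropy to the adjusted prediction is a guess at the objective, since the Cross Validated snippet is cut off after "optimize".

```python
import math
import random

def shift_labels(labels, num_classes, alpha, seed=0):
    """Switch a randomly chosen fraction alpha of labels i to i + 1 (max class wraps to 0)."""
    rng = random.Random(seed)  # hypothetical fixed seed, for repeatability only
    n_noisy = round(alpha * len(labels))
    chosen = set(rng.sample(range(len(labels)), n_noisy))
    return [(y + 1) % num_classes if i in chosen else y
            for i, y in enumerate(labels)]

def noise_adjusted_probs(probs, noise_rate=0.3):
    """Return noise_rate / N + (1 - noise_rate) * p for each class probability p."""
    n = len(probs)
    return [noise_rate / n + (1.0 - noise_rate) * p for p in probs]

def cross_entropy(label, probs):
    """Cross-entropy loss of a single example with an integer class label."""
    return -math.log(probs[label])

# Toy data (assumption): 100 labels over 4 classes, 20% of them corrupted.
labels = [0, 1, 2, 3] * 25
noisy = shift_labels(labels, num_classes=4, alpha=0.2)

# A confident prediction for class 0, scored against a (possibly wrong) label 1.
p = [0.97, 0.01, 0.01, 0.01]
p_tilde = noise_adjusted_probs(p)
print(cross_entropy(1, p), cross_entropy(1, p_tilde))
```

Because the adjusted prediction never assigns less than `noise_rate / N` to any class, a single mislabeled example cannot produce an arbitrarily large loss, which is the intuition behind this kind of adjustment.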

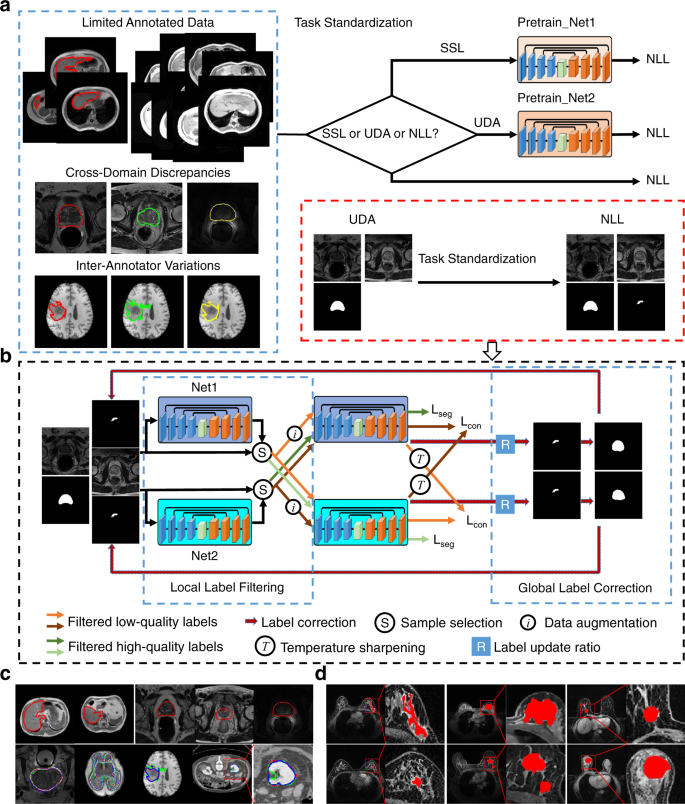

Tongliang Liu's Homepage We are broadly interested in the fields of trustworthy machine learning and its interdisciplinary applications, with a particular emphasis on learning with noisy labels, adversarial learning, transfer learning, unsupervised learning, and statistical deep learning theory. We are recruiting PhD and visitors.

[2202.08436] PENCIL: Deep Learning with Noisy Labels - arXiv by K Yi · 2022 - Abstract: Deep learning has achieved excellent performance in various computer vision tasks, but requires a lot of training examples with ...

Researchers leverage new machine learning methods to learn ... Oct 12, 2022 · For example, in medical analysis, domain expertise is required to label medical data, which may suffer from high inter- and intra-observer variability, resulting in noisy labels. These noisy labels will deteriorate the model performance, which might affect the decision-making process that impacts human health negatively. Thus it is necessary to ...

[P] Noisy Labels and Label Smoothing : MachineLearning It's safe to say it has significant label noise. Another thing to consider is things like dense prediction of things such as semantic classes or boundaries for pixels over videos or images. By their very nature classes may be subjective, and different people may label with different acuity, add to this the class imbalance problem.

Learning from Noisy Labels with No Change to the Training Process - PMLR Conference paper by Mingyuan Zhang, Jane Lee, and Shivani Agarwal. In Proceedings of the 38th International Conference on Machine Learning (Proceedings of Machine Learning Research, PMLR, pmlr-v139-zhang21k), 2021, edited by Marina Meila and Tong Zhang, pp. 12468-12478.

GitHub - weijiaheng/Advances-in-Label-Noise-Learning: A ... Jun 15, 2022 · Learning from Noisy Labels via Dynamic Loss Thresholding. Evaluating Multi-label Classifiers with Noisy Labels. Self-Supervised Noisy Label Learning for Source-Free Unsupervised Domain Adaptation. Transform consistency for learning with noisy labels. Learning to Combat Noisy Labels via Classification Margins.

How to handle noisy labels for robust learning from uncertainty In summary, there are four main factors that can contribute to the effective handling of noisy labels: "small-loss", "double", "cross update" and "divergence". Our UACT is motivated by five main factors to achieve the best performance.
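The snippet above names "small-loss" and "cross update" without describing them. As commonly used in co-teaching-style training, the idea is that each of two models keeps the samples on which its own loss is smallest (treating them as probably clean) and hands that selection to its peer for the next update. The sketch below shows only this selection step; the function names, the `keep_ratio` parameter, and the loss values are illustrative assumptions and are not taken from the UACT article.

```python
def small_loss_indices(losses, keep_ratio):
    """Return indices of the keep_ratio fraction of samples with the smallest loss."""
    n_keep = max(1, int(keep_ratio * len(losses)))
    return sorted(range(len(losses)), key=lambda i: losses[i])[:n_keep]

def cross_update_selection(losses_a, losses_b, keep_ratio):
    """Each model picks its small-loss samples; the peer model trains on them."""
    picked_by_a = small_loss_indices(losses_a, keep_ratio)
    picked_by_b = small_loss_indices(losses_b, keep_ratio)
    return {"train_a_on": picked_by_b, "train_b_on": picked_by_a}

# Hypothetical per-sample losses from two models on the same mini-batch.
losses_a = [0.1, 2.3, 0.2, 1.9, 0.05, 3.0]
losses_b = [0.3, 0.1, 0.2, 2.5, 0.15, 2.8]

selection = cross_update_selection(losses_a, losses_b, keep_ratio=0.5)
print(selection)
```

Exchanging selections rather than reusing one's own is meant to keep the two models from reinforcing the same mistakes; a real training loop would recompute the losses and the selection for every mini-batch.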

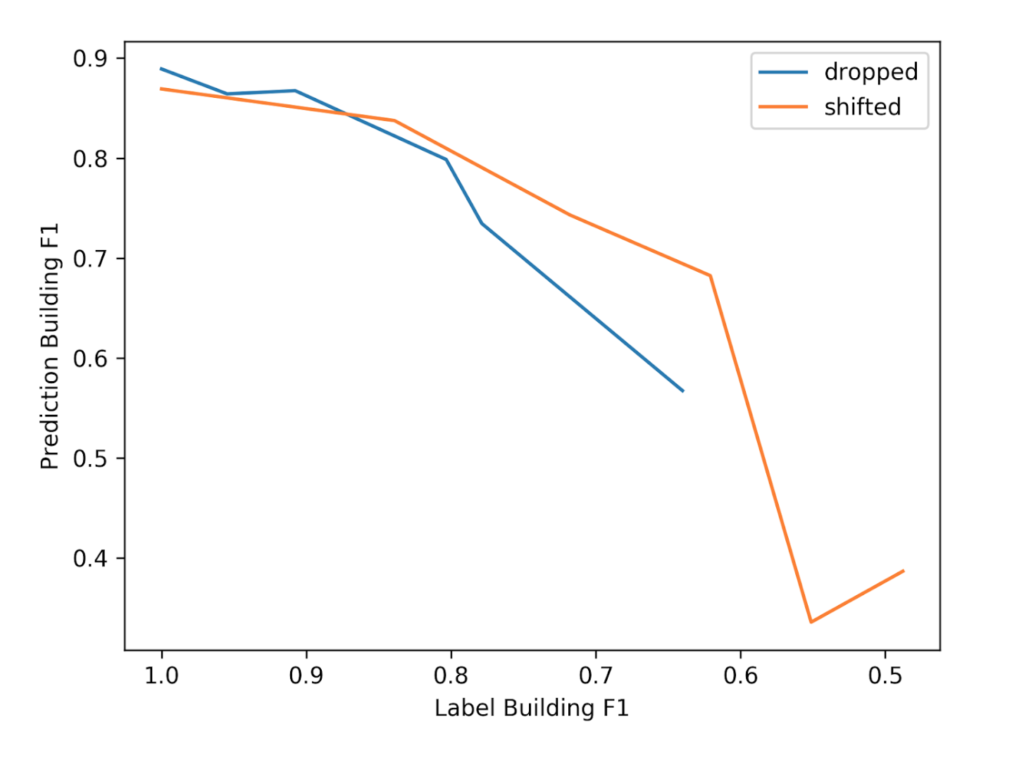

Using Noisy Labels to Train Deep Learning Models on Satellite ... - Azavea Using Noisy Labels to Train Deep Learning Models on Satellite Imagery. Deep learning models perform best when trained on a large number of correctly labeled examples. The usual approach to generating training data is to pay a team of professional labelers. In a recent project for the Inter-American Development Bank, we tried an alternative ...

Data-Centric Approach vs Model-Centric Approach in Machine ... Jul 22, 2022 · For example, if data scientist 1 labels pineapple separately but data scientist 2 labels it combined, the data will be incompatible, which confuses the learning algorithm. The main goal is to maintain consistency in labels; if you're labeling it independently, make sure all items are labeled the same way.

Co-learning: Learning from Noisy Labels with Self-supervision by C Tan · 2021 · Cited by 11 — Abstract: Noisy labels, resulting from mistakes in manual labeling or webly data collecting for supervised learning, can cause neural networks to overfit ...

Early-Learning Regularization Prevents Memorization of Noisy Labels We propose a novel framework to perform classification via deep learning in the presence of noisy annotations. When trained on noisy labels, deep neural networks have been observed to first fit the training data with clean labels during an "early learning" phase, before eventually memorizing the examples with false labels. We prove that early learning and memorization are fundamental phenomena ...

Learning from Noisy Labels with Deep Neural Networks - arXiv As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important task in modern deep learning applications. In this survey, we first describe the problem of learning with label noise from a supervised learning perspective.

GitHub - richtertill/noisy_machine_learning: Experiment to include ... Installation: Clone this repository (git clone). Usage: This application is free to be used and extended in order to draw attention to reliable machine learning. License: Licensed under the MIT license. Contributing: Any contribution is appreciated. A lot of areas remain open.

PDF Selective-Supervised Contrastive Learning With Noisy Labels 3 Trustworthy Machine Learning Lab, The University of Sydney, Australia {lishikun,geshiming}@iie.ac.cn, xxia5420@uni.sydney.edu.au, tongliang.liu@sydney.edu.au ... There is a large body of recent work on learning with noisy labels, which includes but is not limited to estimating the noise transition matrix [9,20,53,54], reweighting ex- ...

GitHub - subeeshvasu/Awesome-Learning-with-Label-Noise: A ... 2021-IJCAI - Towards Understanding Deep Learning from Noisy Labels with Small-Loss Criterion. 2022-WSDM - Towards Robust Graph Neural Networks for Noisy Graphs with Sparse Labels. 2022-Arxiv - Multi-class Label Noise Learning via Loss Decomposition and Centroid Estimation.

A Convergence Path to Deep Learning on Noisy Labels This article aims to reveal a convergence path of a trained model in the presence of label noise, and here, the convergence path depicts the evolution of a trained model over epochs. We first...

Deep learning with noisy labels: Exploring techniques and remedies in ... Most of the methods that have been proposed to handle noisy labels in classical machine learning fall into one of the following three categories ( Frénay and Verleysen, 2013 ): 1. Methods that focus on model selection or design. Fundamentally, these methods aim at selecting or devising models that are more robust to label noise.

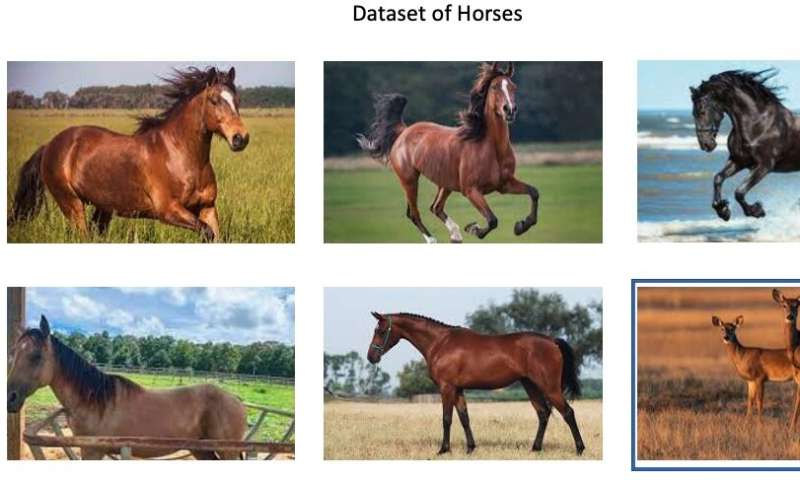

machine learning - What exactly is label noise? - Computer Science ... Supervised machine learning algorithms train classification algorithms using labelled data. The labels in the training set are typically manually generated by humans, who sometimes mislabel data. This is known as label noise. Label noise is usually the result of honest mistakes, but sometimes occurs out of malice.

Noisy Labels: Theoretical Approaches/Empirical Studies Description: A machine learning system continuously observes noisy training annotations and it remains a challenge to perform robust training in such scenarios. Earlier and classical approaches rely on estimation processes to understand the noise rate of the labels and then leverage this knowledge to perform label correction, loss correction ...

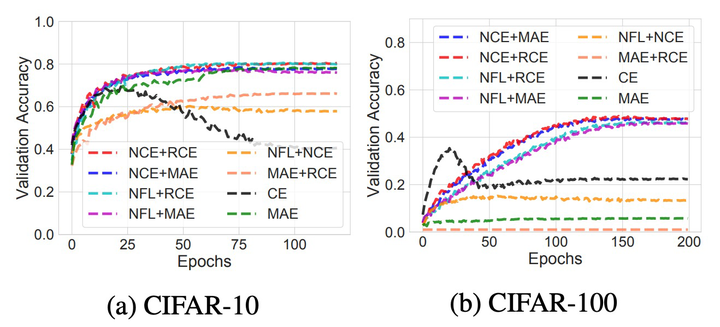

On Learning Contrastive Representations for Learning with Noisy Labels by Li Yi, Sheng Liu, Qi She, A. Ian McLeod, Boyu Wang. Deep neural networks are able to memorize noisy labels easily with a softmax cross-entropy (CE) loss. Previous studies that attempted to address this issue focus on incorporating a noise-robust loss function into the CE loss.

Example -- Learning with Noisy Labels - Stack Overflow Cleaning up the labels would be prohibitively expensive. So I'm left to explore "denoising" the labels somehow. I've looked at things like "Learning from Massive Noisy Labeled Data for Image Classification"; however, they assume to learn some sort of noise covariance matrix on the outputs, which I'm not sure how to do in Keras.

How Noisy Labels Impact Machine Learning Models | iMerit Supervised Machine Learning requires labeled training data, and large ML systems need large amounts of training data. Labeling training data is resource intensive, and while techniques such as crowd sourcing and web scraping can help, they can be error-prone, adding 'label noise' to training sets.


![[PDF] Image Classification with Deep Learning in the Presence ...](https://d3i71xaburhd42.cloudfront.net/33a2e0c7ea17031f4e6f28496a9b8f3222cb2904/4-TableI-1.png)

![[PDF] A Survey on Deep Learning with Noisy Labels: How to ...](https://d3i71xaburhd42.cloudfront.net/ec0ef2e2df03c89d5343f0f07c387d477a20da0d/2-Figure1-1.png)

![[P] cleanlab: accelerating ML and deep learning research with ...](https://external-preview.redd.it/RXmK5qbaThHfiuz1h2SoPJJoczjpZfzgqmwc3jRiVaA.png?width=640&crop=smart&auto=webp&s=5252ad5ba766111ac8e7f636f13195625dd18e37)

![[D] Generalization from Noisy Labels : r/MachineLearning](https://external-preview.redd.it/v5muIaM7VsGH2Dqg9n3PIOaZ4j4XsHcywOLzhSDqUpY.jpg?auto=webp&s=0a99d33589862aa512e8b24583f839910cb0f2c4)

![[PDF] Deep learning with noisy labels: exploring techniques ...](https://d3i71xaburhd42.cloudfront.net/11f7ca99836c0151dae9d22df5cff874f42992e2/1-Figure1-1.png)
